And unfortunately it’s a capitalistic one. Economic return takes precedence over everything, which means that if something starts off as a bright pocket of creativity and uniqueness, it’ll eventually either get bought out by a larger media conglomerate and dissolved into another bland property that is safe and guaranteed to turn a profit, OR go bankrupt from refusing to conform. From a filmmaking perspective, that’s why it’s much easier to greenlight reboots and sequels and renew 50 seasons of reality TV** than to invest in new ideas. They’re less risky and already have a track record of making money based on the performance of their predecessors. It’s endemic and leads to very unimaginative pieces of media. This type of model is quite literally the antithesis of creativity, and it’s only a matter of time before the bubble bursts. The real question is: what can we start doing now to build the foundations for new media-development practices that balance creative innovation and financial feasibility? (I don’t have an answer yet, but this is something that’s been on my mind a lot lately.)

**No shade on reality TV; it can be hella entertaining, but I can’t ignore the fact that there’s been a boom in this type of content since the pandemic. In the eyes of streaming platforms (with Netflix being the biggest culprit), churning it out in bulk is infinitely easier than investing in developing an original limited series.

Nov 17, 2024

Related Recs

⭐

i feel like although content creation presents itself as an opportunity to make niche art, in practice all i see looks the same: well-lit pictures of happy people, modern web design or sexual illustration.

I miss the weird internet, the handheld video cameras.

Oct 21, 2024

📰

anyone on pi.fyi will likely feel seen and heard when reading this. it basically highlights all the redundancies and senseless aspects of social media, how it’s disrupted every industry, and how we are all dominated by the algorithm and have to be our own hype person. i always feel like an idiot when i finish a record or a book that i'm really excited to share with the world and then have to think about “content” to promote the art itself. obviously pi.fyi feels like a refreshing beacon of hope because artists can share their work here in a far simpler and more wholesome manner. the article also addresses non-creative jobs like accountants and other professions that are all being forced to become an “influencer” of some sort or build a brand. it’s spooky, yet we’re all feeling the fatigue, so hopefully we can see a less algorithmic future soon…

Feb 8, 2024

i think that large language models like chatgpt are effectively a neat trick we’ve taught computers to do that just so happens to be *really* helpful as a replacement for search engines; instead of indexing sources that contain the knowledge you’re interested in finding, it just indexes the knowledge itself. i think that there are a lot of conversations around how we can make information more “accessible” (both in terms of accessing paywalled knowledge and in terms of that knowledge’s presentation being intentionally obtuse and only easily parseable by other academics), but there are very few actual conversations about how llms could be implemented to easily address both kinds of accessibility.

because there isn’t a profit incentive to do so.

llms (and before them, blockchains - but that’s a separate convo) are just tools; but in the current economic landscape a tool isn’t useful if it can’t make money, so there’s this inverse law of the instrument happening where the owning class’s insistence that we only have nails in turn means we only build hammers. any hot new technological framework has to either slash costs for businesses by replacing human labor (like automating who sees what ads when and where), or drive a massive consumer adoption craze (like buying crypto, an oculus or an iphone). with llms, it’s an arms race to build tools for businesses to reduce headcount by training base models on hyperspecific knowledge. it also excuses the ethical transgression of training these models on stolen knowledge / stolen art, because when has ethics ever stood in the way of making money?

the other big piece is tech literacy; there’s an incentive for founders and vcs to obscure (or just lie about) what a technology is actually capable of in order to increase the value of the product. the metaverse could “supplant the physical world.” crypto could “supplant our economic systems.” now llms are going to “supplant human labor and intelligence.” these are enticing stories for the owning class, because they give them a New Thing that will enable them to own even more. but none of this tech can actually do that shit, which is why the booms around them bust in 6-18 months like clockwork. llms are a perfect implementation of [searle’s chinese room](https://plato.stanford.edu/entries/chinese-room/), but sam altman et al *insist* that artificial general intelligence is possible, and the upper crust of silicon valley are doing moral panic at each other about how “ai” is either paramount to or catastrophic for human flourishing, *when all it can do is echo back the information that humans have already amassed over the course of the last ~600 years.* but most people (including the people funding the technology and the ceo types attempting to adopt it en masse) don’t know how it works under the hood, so it’s easy to pilot the ship in whatever direction fulfills a profit incentive, because we can’t meaningfully imagine how to use something we don’t effectively understand.

Mar 24, 2024

Top Recs from @verygoodvalentina

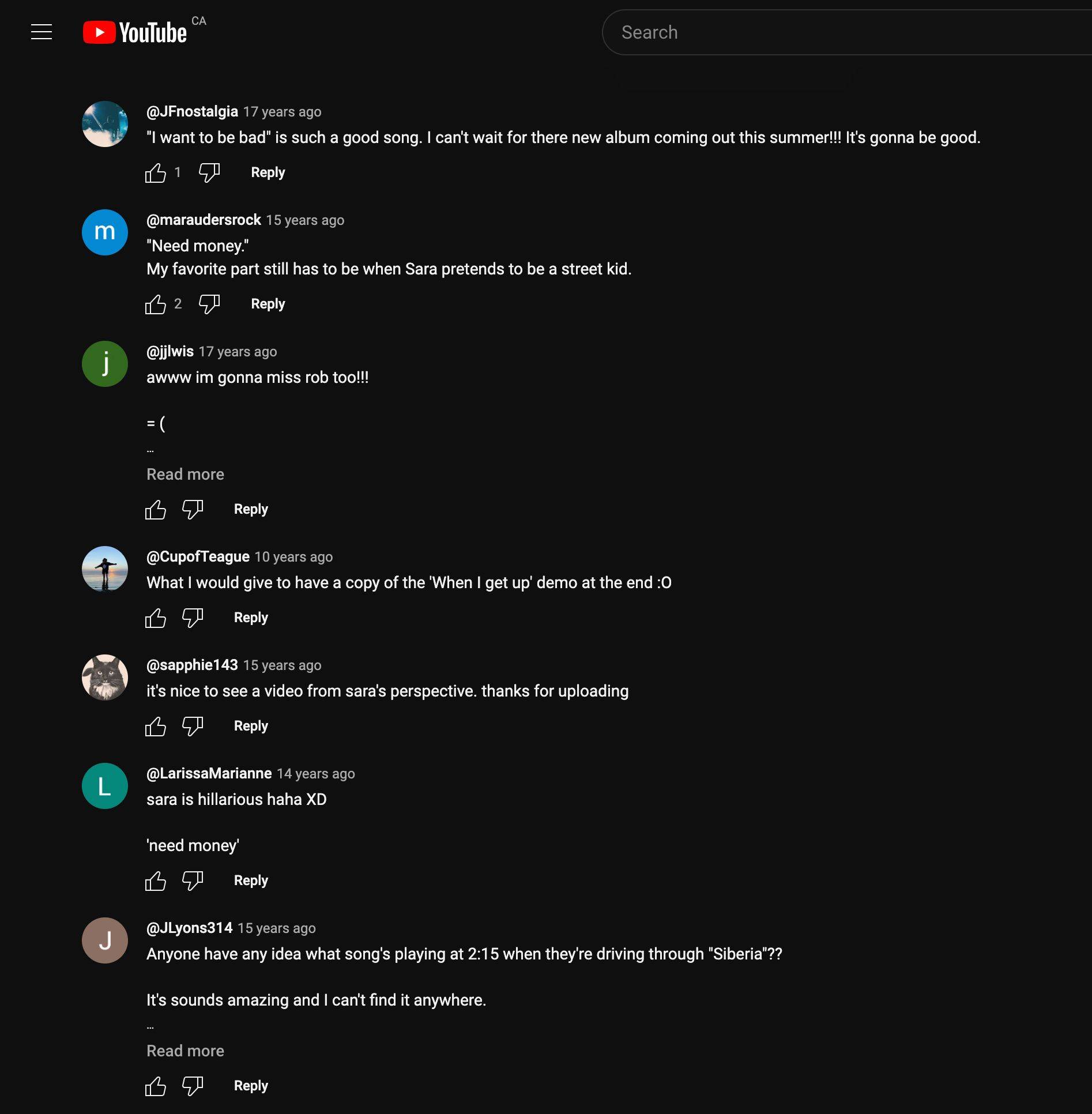

I adore finding a random video from like 2005 and reading through the comments the way a historian would examine an old manuscript from the 1700s. Are these people still active YouTube users? Or are they forgotten accounts? What did @jjlwis mean by "awww im gonna miss rob too!!!"? Who even is Rob?? Anthropology in the digital age... so many questions... it's fascinating.

The important thing for me is not to add new comments. I feel like I'm disturbing an old archaeological dig site and my sticky modern commentary will make the video crumble away into oblivion. More importantly, I don't want the algorithm to suggest the video to a bunch of people who will spam the comments section. Major yuck 🤢

Jan 25, 2024

early 80s to early 2000s truck models are the perfect size imo. current trucks are transformer-sized behemoths that could easily crush normal vehicles into smithereens on impact, and i legit don’t know how those things are even street-legal. also, idk if it’s their design, their reliability or the nostalgia factor per se, but there’s a certain sazón (flavor) those older trucks have that newer ones don’t.

2024 Ford F-150? 🤮🤢

1980 Ford F-150? 🫦🫦

Feb 9, 2025

🫂

with social media being this pervasive entity that has woven its way into our daily routines for the past 20ish years (plus a global pandemic that really solidified those habits), many young adults today have spent a large amount of their lives living online. it has become the new norm and i’m not gonna pretend i’m above any of this because it’s so easy to fall into it (i am literally writing this rec on my phone on a perfectly sunny day that i should probably go out and enjoy).

with that being said, in the larger scheme of life, being in your 20s is still, in a weird way, the beginning stage of your life. it’s a period to try new things, make mistakes, learn from them and develop an identity that’s independent of the environment and people who raised you. though you can learn to do some of those things online, it doesn’t hold a candle to actually experiencing them for yourself in real life.

all in all, the best way to not sleep thru your 20s is to prioritize in-person experiences that give you a better understanding of yourself and your values. whether that be getting your first tattoo, moving to a new city or country, exploring your personal style or taking up hobbies you couldn’t have or never would’ve tried as a kid, this is an important formative time to venture out and get a sense of who you truly are.

Sep 30, 2024